ART-Challenge: a portable device for hardware-accelerated UltraHD/4K + 3D/VR, real-time HDMI/RTP/WebRTC live streaming and communication

Why?

For the last few months I have been working on a new product based on real-time 3D/VR live streaming into the mobile browser. It was a huge challenge to deliver such a platform to the market because there are tons of tiny missing elements which must be built from scratch. This affects mechanics, electronics, software and basically the entire architecture on every level. This journey has shown me that with a brute-force approach we can solve a lot of problems if we ignore price, size and general usability. However, there are problems which are not trivial to solve, or whose solutions simply cannot be bought! One of them is a FullHD 3D/VR-ready camera which would fit some generic use cases.

In my opinion it would be worthwhile to dig deeper into the 3D camera topic, the VR streaming topic and the idea of bringing a "portable 3D/VR platform" to regular homes.

As I said, this is a very complicated topic. It requires huge computing power, high-precision optics and tons of lines of software. Fortunately, there are many open source hardware & software projects right now, but they are like "dust in the wind" if you do not have a recipe for how to connect them together. If you clearly know your goal, which is very specific in this case, I claim that building this very advanced platform is possible right now in my basement!

Why has no one done it before?

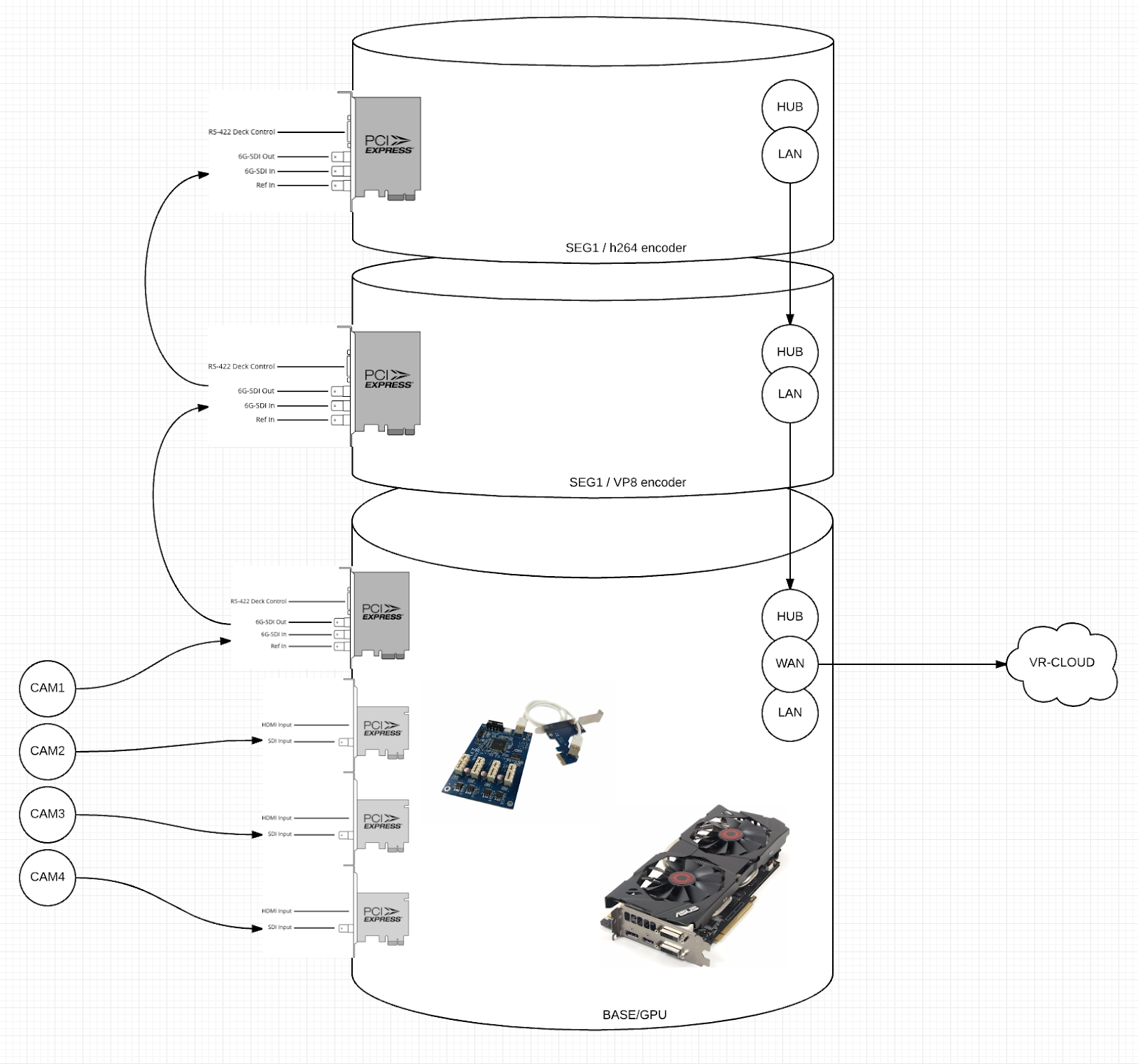

Actually it has been done many times in recent years. The problem is the size, the price and the incredible complexity on every level (cameras, converters, cables, server towers etc.). Most of these setups require a lot of power and are not portable! Most of them have been built by brute force with the goal of delivering anything that is just WORKING!

Below is a rough draft overview of such a platform, which requires video/audio inputs, a GPU for processing video and many CPUs for encoding if it is not supported by hardware.

What are the benefits of this challenge for business and home usage?

75% REDUCTION IN COST - compared to "obvious" brute-force solutions

75% REDUCTION IN SIZE - compared to "obvious" brute-force solutions

INCREASED MOBILITY - must be small and portable

INCREASED CONFIGURABILITY - must be easy to configure

INCREASED EFFICIENCY

FULL REAL-TIME OPERATIONS

In a few words: I wanna use an upcoming open source hardware platform and open source software for media processing and communication to deliver a small, powerful, customizable device for professional and home usage in the incredible and fascinating VR world!

The GOALs

| FEATURE | PROTOTYPE (June 2016) | NEXT GENERATIONs (December 2016) |
| --- | --- | --- |
| Size | maximum size of single Mac-mini | 1 x Mac-Mini |
| Configurable handler/distance for 3D effect | YES, 32-150mm | YES, 32-100mm, controlled via API |
| Adjustable FOV | YES, multiple lenses | YES, multiple lenses |
| Number of cams | 2 or 1x3D | 6 or 3x3D |
| Max resolution per cam | 1080p30 | 4k30 |
| MIC stereo audio support | YES | YES |
| Video stitching in 3D/VR mode | YES | YES, for all sets of cams |
| Chroma keying | YES | YES |
| Distortion cancellation | YES, for 180x192 degrees lens | YES, for many lenses |
| Hardware video encoding | VP8, h264 | VP8, VP9, h264, h265 |
| Hardware audio encoding | Opus, AAC, MP3 | Opus, AAC, MP3 |
| 3D/VR output | HDMI, RTP (3840x1080) | HDMI, WebRTC, RTP |
| Simple system Agent + API + CLI | YES | YES |
| Speakers - stereo | NO | YES |
| Bluetooth headset support | NO | YES |
| Box-agent 2.0 | NO | YES |
| Advanced REST API + CLI manager | NO | YES |
| Intelligent Chroma Keying with faces and objects tracking | NO | YES |
| WebRTC “client” mode (VP8, Opus) | NO | YES |
| WebRTC bridge in “edge” mode (VP8, Opus) | NO | YES |
| 3D rendering with 2D output | NO | YES |
| Web panel | NO | YES |
| Extended REST API | NO | YES |
| Extended objects recognition | NO | YES |
| Advanced pipelines tuning | NO | YES |
| WebRTC bridge in “origin” mode (VP8, Opus) | NO | YES |
| TURN/STUN server | NO | YES |
| VR-BOX Cloud integration | NO | YES |
| Estimated cost | up to $1199 | --- |
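To get a feeling for why hardware encoding matters for these goals, here is a small back-of-the-envelope sketch of the raw (uncompressed) data rates behind the camera rows above. It rests on two assumptions of mine that the goals do not state: frames are stored as I420 (YUV 4:2:0, 1.5 bytes per pixel) and "4k" means 3840x2160. The function names are just illustrative.

```python
from typing import Tuple

# Assumption: I420 (YUV 4:2:0) frames, i.e. 12 bits = 1.5 bytes per pixel.
BYTES_PER_PIXEL_I420 = 1.5


def raw_rate_mbit(width: int, height: int, fps: int, cams: int = 1) -> float:
    """Return the uncompressed data rate in Mbit/s for `cams` identical streams."""
    bytes_per_second = width * height * BYTES_PER_PIXEL_I420 * fps * cams
    return bytes_per_second * 8 / 1_000_000


def side_by_side_size(width: int, height: int) -> Tuple[int, int]:
    """Size of a stereo frame with the left and right views packed side by side."""
    return (2 * width, height)


# Prototype: 2 cams at 1080p30. Next generation: 6 cams at 4k30 (assumed 3840x2160).
prototype = raw_rate_mbit(1920, 1080, 30, cams=2)
next_gen = raw_rate_mbit(3840, 2160, 30, cams=6)

print(side_by_side_size(1920, 1080))                  # (3840, 1080)
print(f"prototype: {prototype:.1f} Mbit/s raw")       # 1493.0
print(f"next generation: {next_gen:.1f} Mbit/s raw")  # 17915.9
```

Under these assumptions the prototype already moves about 1.5 Gbit/s of raw video before encoding, and packing two 1080p views side by side gives exactly the 3840x1080 frame listed for the RTP output.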

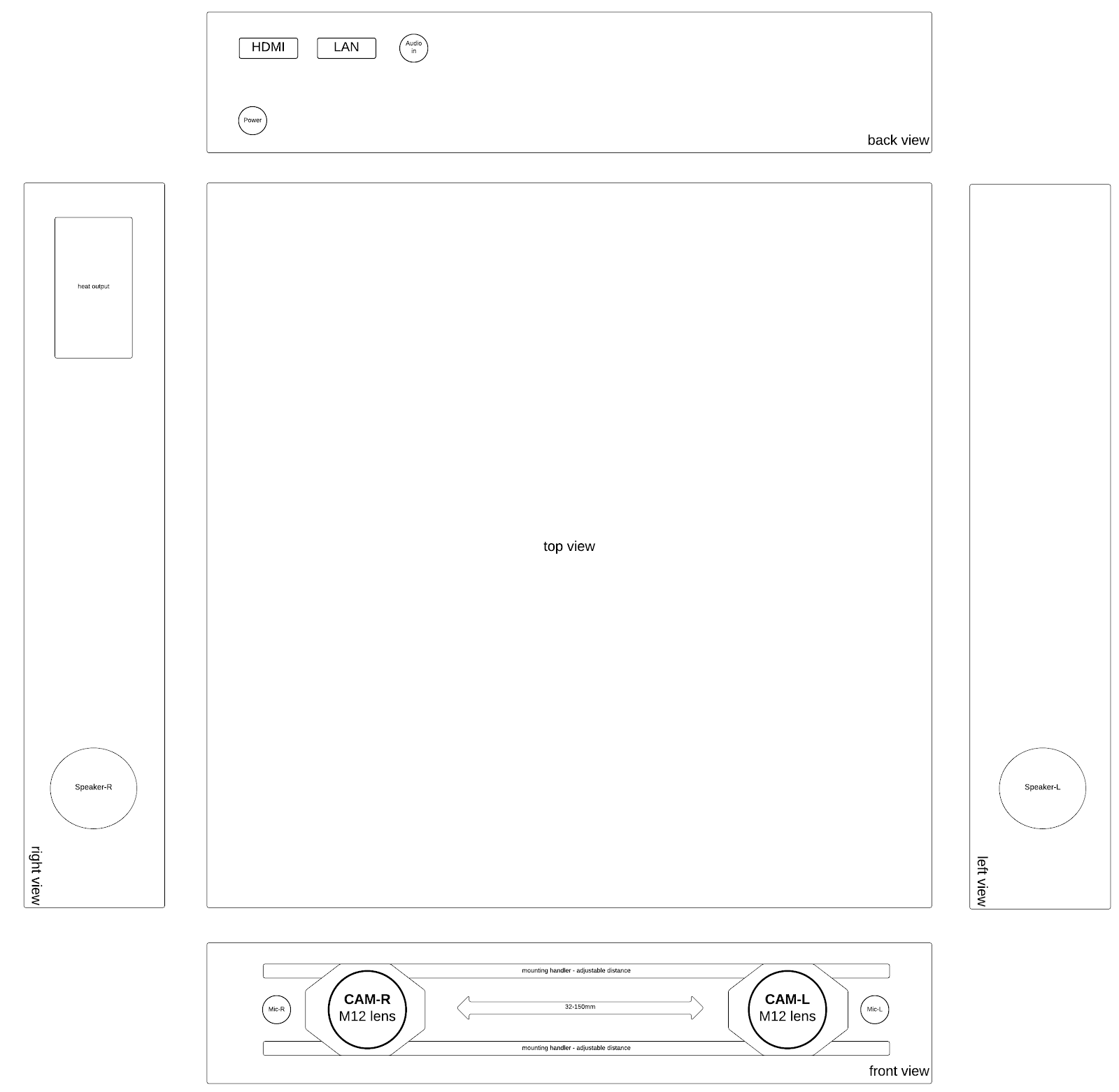

What could it look like?

The idea is to build a very compact, portable platform which combines CPUs, GPUs, CAMs, encoders, converters, bridges, storage and other required elements in a small box.

Inputs:

- CAM-L + configurable lens and position

- CAM-R + configurable lens and position

- MIC-L

- MIC-R

- Audio-IN (optional)

- Power

Outputs:

- HDMI

- LAN (RTP, WebRTC)
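The inputs and outputs listed above could be described in software roughly like this. It is only a minimal sketch: no configuration format exists yet, so every class, field and constant name here is hypothetical, and the only number taken from the goals is the 32-150mm camera distance of the prototype.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical names throughout; only the range below comes from the goals table.
BASELINE_RANGE_MM = (32, 150)


@dataclass
class Camera:
    name: str           # "CAM-L" or "CAM-R"
    lens: str           # free-form lens label, e.g. "wide"
    position_mm: float  # offset from the device centre along the stereo axis


@dataclass
class DeviceIO:
    cam_left: Camera
    cam_right: Camera
    mics: List[str] = field(default_factory=lambda: ["MIC-L", "MIC-R"])
    audio_in: bool = False  # the optional Audio-IN
    outputs: List[str] = field(default_factory=lambda: ["HDMI", "RTP"])

    def baseline_mm(self) -> float:
        """Distance between the two cameras, which drives the 3D effect."""
        return abs(self.cam_right.position_mm - self.cam_left.position_mm)

    def validate(self) -> None:
        """Raise ValueError if the camera distance is outside the supported range."""
        lo, hi = BASELINE_RANGE_MM
        if not lo <= self.baseline_mm() <= hi:
            raise ValueError(f"baseline {self.baseline_mm()} mm outside {lo}-{hi} mm")


io = DeviceIO(Camera("CAM-L", "wide", -32.5), Camera("CAM-R", "wide", 32.5))
io.validate()
print(io.baseline_mm())  # 65.0
```

Keeping the camera positions as plain data would make it easy to expose the distance later through an API, which is one of the next-generation goals.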

What are the practical use cases?

- 3D/VR-based conferencing

- Home Studio Streaming

- Professional Studio Streaming

- Medical services

- Entertainment services

- Anything you can imagine...

What's next?

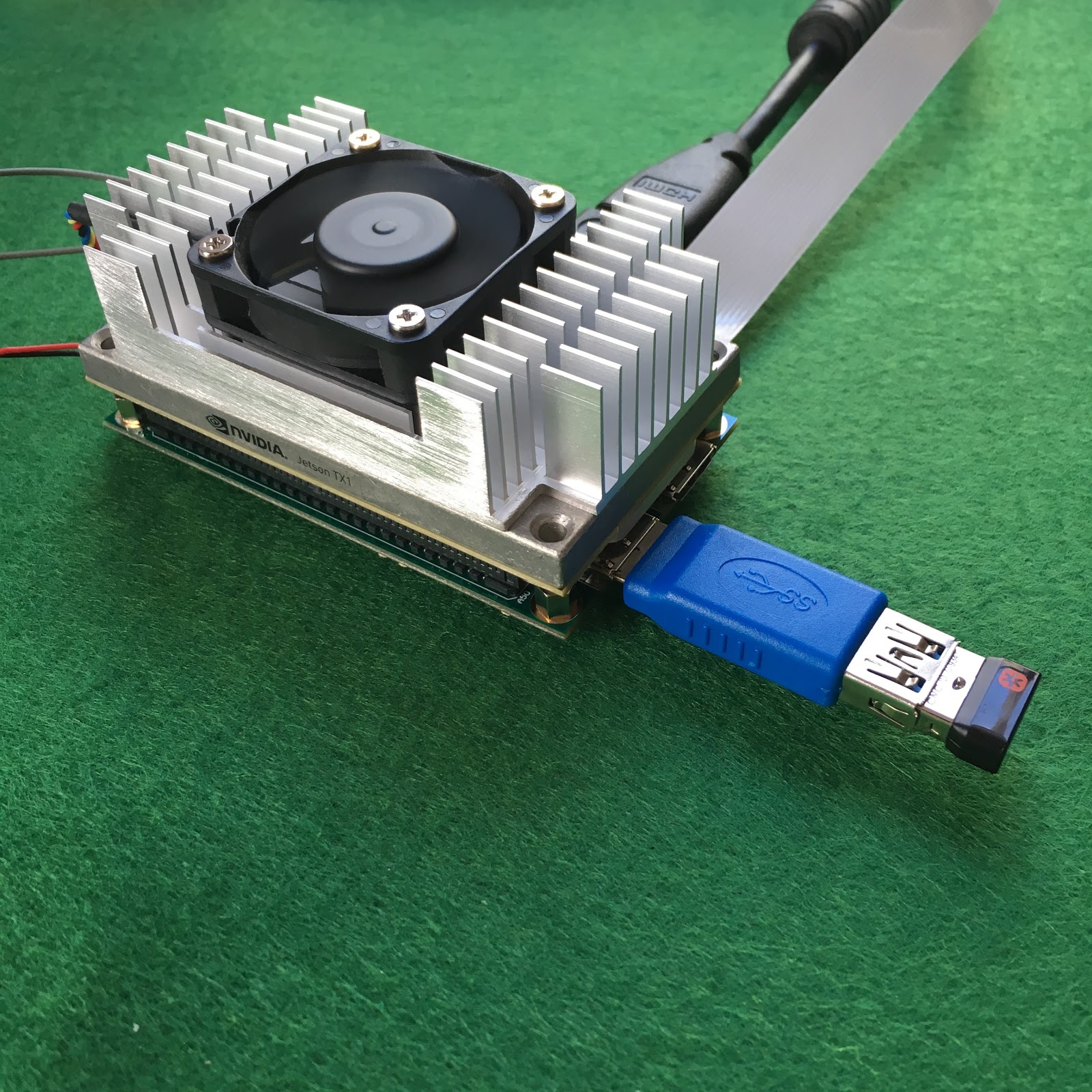

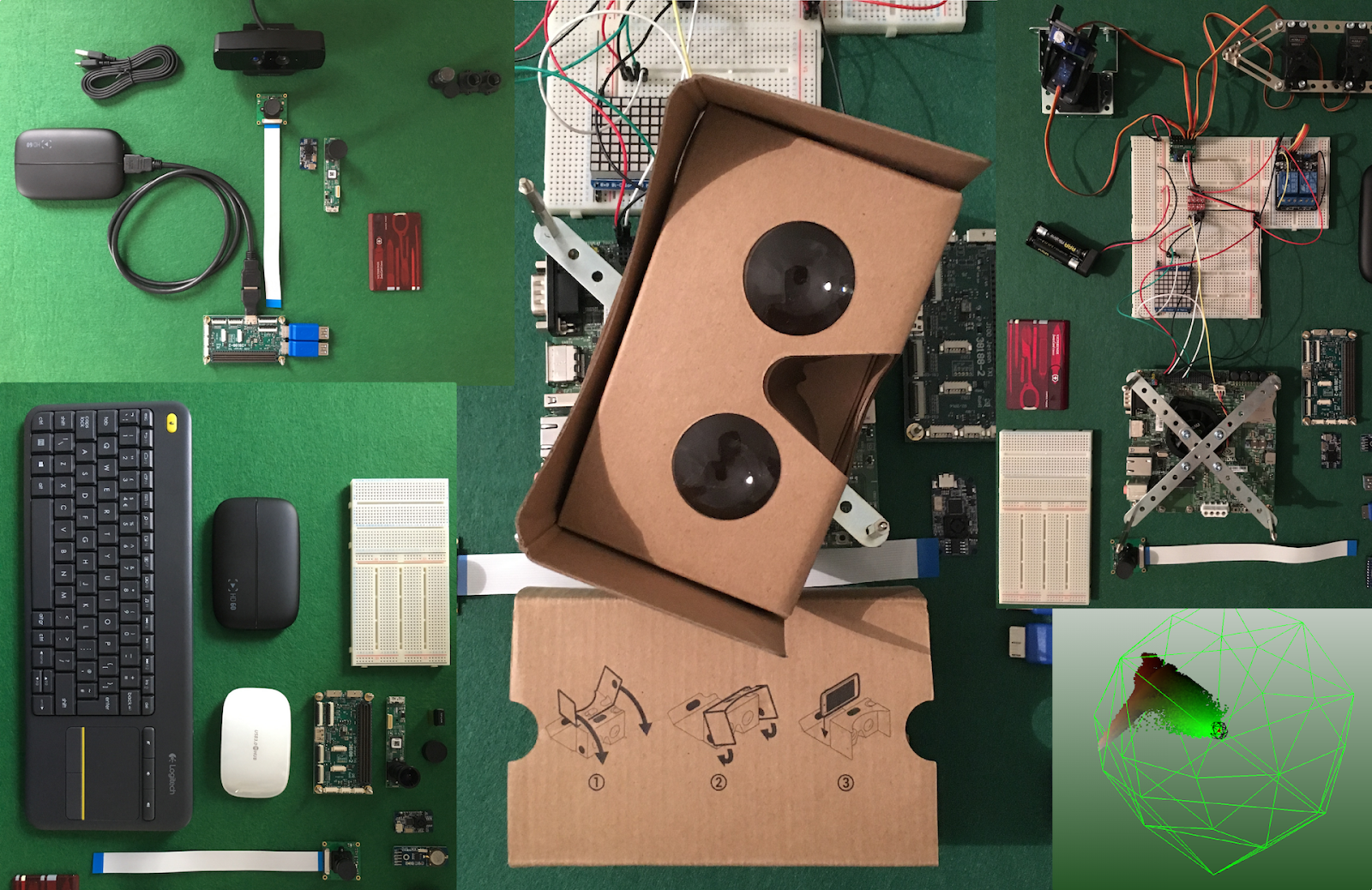

I have already spent a lot of time researching portable CPU/GPU platforms which would be the heart of such a device. I have also researched multiple camera and lens options which would capture stereoscopic video and audio. Some of these components are on the way to my lab. The others are waiting to be officially released to the market by the end of March 2016.

The most realistic date for having all required components is the beginning of April 2016. This is because incredibly advanced components are gonna be released to the market in the next 1-2 months. Some of them are designed and produced in the US, some of them in Germany. Let's hope there will be no delay from any team :)

I am gonna share my first steps in the following blog posts. At this point I wanna say that I do not plan to use any of the commonly used and expensive solutions like GoPro Hero, Decklink converters, Decklink video capture cards, advanced CPUs like Intel Skylake or high-performance GPU systems.

Plan (starting on 28 February 2016) - "A brief history of VR":

- Art 3D/VR challenge – week 1 – Abstract overview

- Art 3D/VR challenge – week 2 – Looking for performance

- Art 3D/VR challenge – week 3 – FullHD processing on portable GPU vs CPU

- Art 3D/VR challenge – week 4 – Hardware encoding on portable GPU

- Art 3D/VR challenge – week 5 – Media framework on portable GPU

- Art 3D/VR challenge – week 6 – OpenGL/OpenCV on portable GPU

- Art 3D/VR challenge – week 7 – 3D/VR tricks using media frameworks and GPU

- Art 3D/VR challenge – week 8 – Configurable cameras setup

- Art 3D/VR challenge – week 9 – Audio input and output

- Art 3D/VR challenge – week 10 – Controlling and management of media pipeline

- Art 3D/VR challenge – week 11 – REST API and CLI tooling

- Art 3D/VR challenge – week 12 – HDMI and RTP output

- Art 3D/VR challenge – week 13 – Advanced tuning and management

- Art 3D/VR challenge – week 14 – Introduction to embedded WebRTC client

- Art 3D/VR challenge – week 15 – Introduction to Intelligent Chroma Keying

- Art 3D/VR challenge – week 16 – Introduction to Intelligent Chroma Keying part 2

- Art 3D/VR challenge – week 17 – Embedded on-board 3D scene rendering

- Art 3D/VR challenge – week 18 – Embedding VR video into 3D scene

- Art 3D/VR challenge – week 19 – 3D into 2D scene dumping

- Art 3D/VR challenge – week 20 – Introduction to embedded WebRTC server

- Art 3D/VR challenge – week 21 – 3D/VR and 2D live streaming

Contribution

Feel free to contact me if you are interested in meeting the team and contributing to this project in any programming language (go, php, ruby, js, node.js, objective-c, java...). This project is parked on GitHub.

See my contact page if required.

© COPYRIGHT KRZYSZTOF STASIAK 2016. ALL RIGHTS RESERVED
