Future of AI-Driven Virtual Try-On

Since the beginning of summer 2020, I have been involved in a fairly unique project in the fashion industry: technology that supports virtual try-on for both online and offline shopping!

You can find a lot of research on virtual fitting rooms online, as well as some early-stage solutions, but there is still no usable product for consumers. All the big players are chasing technology that would reshape how people buy clothes and improve the consumer experience, but it is a very complex problem.

These days, most of the advanced research focuses on several topics:

- Rendering

- 3D human body - including the face, hands, feet, and including poses & expressions

- 3D human apparel features - extra layers on the body including clothes and accessories

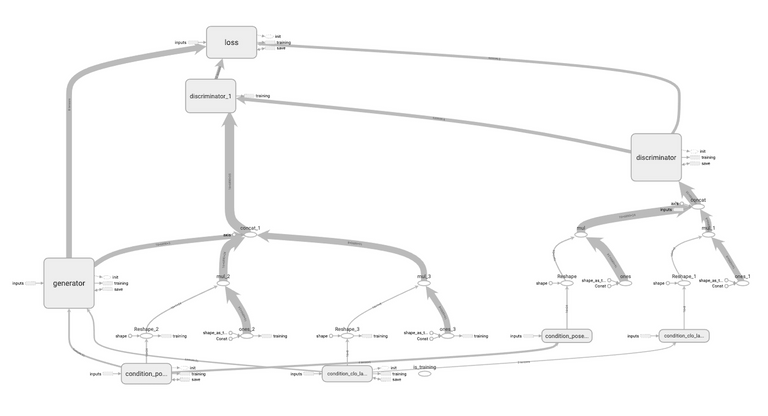

- AI training and inference

- Recognition of human pose, expression, body dimensions using 2D/3D/4D signals (photos, 3D scans, volumetric capture)

- Learning from garments - extraction of 2D/3D/4D physical parameters and unifying into re-usable models

- Learning from human bodies - extraction of 2D/3D/4D physical parameters and unifying into re-usable models

- Estimation of user avatar - reconstruction of physical parameters and unifying model using as little data as possible (eg 1 photo)

- Estimation of virtual try-on look - estimation of 3D model of a 3D avatar wearing the 3D garment

- Capturing

- Hardware

- single stereo camera stream - it's achieved by high-quality image (CMOS) sensors OR infrared (IR) sensors OR laser (LiDAR) scanning OR light-field sensors. You can find great results using (cheap) Intel RealSense, StereoLabs, Azure cameras. As result, the camera produces a depth map and a point cloud of limited "FOV"

- multi stereo camera stream - it's achieved by structured layout/mounting of stereo cameras around the object. All cameras are synchronized in time. As result, the system produces a complete depth map and a point cloud that can be transformed into a wireframe and then mesh.

- Tools

- Basic: stereo recording, point cloud stitching, wireframe, mesh, export

- Advanced: calibration, point cloud noise reduction, GPU acceleration

- Hardware

Even though the list is already long, it is just the tip of the iceberg once you consider the practical implementation of such technology in the existing fashion retail chain.
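The capture side of the list above (depth map to point cloud, multi-camera stitching, point cloud noise reduction) can be illustrated with a small, dependency-free Python sketch. It assumes an ideal pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and already-calibrated extrinsics (rotation `R`, translation `t`) for each camera. All function names and values are hypothetical: this is not the API of the RealSense, StereoLabs, or Azure Kinect SDKs, nor of point cloud libraries such as Open3D, which provide optimized (and GPU-accelerated) versions of these steps.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical helpers for illustration only, not a vendor SDK API.
Point = Tuple[float, float, float]


def depth_to_points(depth: Sequence[Sequence[float]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project a depth map (one Z value per pixel, 0 = no reading) into
    camera-frame 3D points with the pinhole model:
    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy."""
    points: List[Point] = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column
            if z <= 0:                       # skip pixels with no depth reading
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def to_world(points: Sequence[Point],
             rotation: Sequence[Sequence[float]],
             translation: Sequence[float]) -> List[Point]:
    """Move camera-frame points into a shared world frame: p_w = R * p_c + t,
    where R and t are the camera's extrinsics from calibration."""
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
              for i in range(3))
        for p in points
    ]


def remove_outliers(points: Sequence[Point], k: int = 8,
                    std_ratio: float = 2.0) -> List[Point]:
    """Statistical outlier removal: drop points whose mean distance to their
    k nearest neighbours exceeds mean + std_ratio * std over the whole cloud.
    Brute force O(n^2), so only suitable for small clouds."""
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = sum(mean_dists) / len(mean_dists)
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / len(mean_dists))
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# Usage with hypothetical values: two synchronized cameras with identical
# intrinsics; camera B sits 1 cm further along Z (identity rotation).
IDENTITY = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
cam_a = depth_to_points([[1.0, 1.0], [1.0, 0.0]], fx=500, fy=500, cx=0.5, cy=0.5)
cam_b = depth_to_points([[1.0, 1.0], [1.0, 1.0]], fx=500, fy=500, cx=0.5, cy=0.5)
merged = to_world(cam_a, IDENTITY, (0, 0, 0)) + to_world(cam_b, IDENTITY, (0, 0, 0.01))
```

In practice, frames from several cameras must be time-synchronized before merging, and the calibrated extrinsics are usually refined with a registration step such as ICP, because small calibration errors show up as doubled surfaces in the stitched cloud.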

In my opinion (Q3 2020), the most advanced research stack, with the highest chance of market adoption, is the work of researchers at the Max Planck Institute for Intelligent Systems (MPI). Of course, these impressive results build on earlier work by many great researchers around the world.

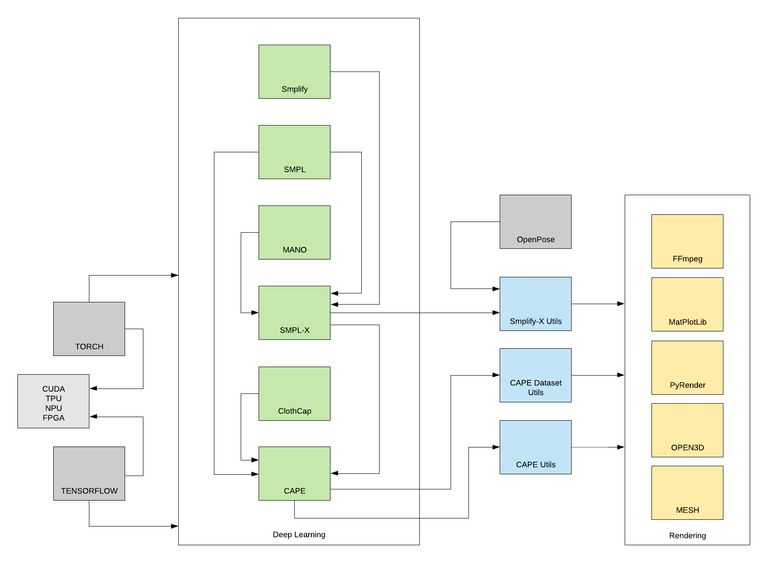

So why does the MPI seem like a good fit? Its strategy of breaking the problem into multiple subproblems (4D scanning, 3D modeling, AI learning, AI inference, visualizations, rich tooling, great documentation), combined with close collaboration, looks like a good way to go.

My findings on the MPI stack (they might still contain some errors, but in general they worked for me):

AI Research

- https://cape.is.tue.mpg.de/

- https://smpl.is.tue.mpg.de/

- http://smpl-x.is.tue.mpg.de/

- https://mano.is.tue.mpg.de/

- http://smplify.is.tue.mpg.de/

- http://clothcap.is.tue.mpg.de/
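As background for the SMPL family linked above, here is my summary of the SMPL body model as described in the SMPL paper: a template mesh is deformed by learned linear shape and pose blend shapes and then posed with linear blend skinning. SMPL-X (full body with hands and face) and MANO (hands) build on the same parametric idea. Notation follows the SMPL paper; treat this as a condensed reading, not a substitute for it.

$$
\begin{aligned}
M(\vec{\beta}, \vec{\theta}) &= W\big(T_P(\vec{\beta}, \vec{\theta}),\; J(\vec{\beta}),\; \vec{\theta},\; \mathcal{W}\big) \\
T_P(\vec{\beta}, \vec{\theta}) &= \bar{T} + B_S(\vec{\beta}) + B_P(\vec{\theta}) \\
B_S(\vec{\beta}) &= \sum_{n=1}^{|\vec{\beta}|} \beta_n S_n, \qquad
B_P(\vec{\theta}) = \sum_{n=1}^{9K} \big(R_n(\vec{\theta}) - R_n(\vec{\theta}^{*})\big) P_n \\
J(\vec{\beta}) &= \mathcal{J}\big(\bar{T} + B_S(\vec{\beta})\big)
\end{aligned}
$$

Here $\bar{T}$ is the template mesh with $N = 6890$ vertices, $\vec{\beta}$ holds the shape coefficients (typically 10), $\vec{\theta}$ holds axis-angle rotations for $K = 23$ joints plus the root (72 values), $S_n$ and $P_n$ are the learned shape and pose blend shapes, $R(\vec{\theta})$ concatenates the $K$ joint rotation matrices ($9K$ values), $\vec{\theta}^{*}$ is the rest pose, $\mathcal{J}$ regresses joint locations from the vertices, and $W$ applies linear blend skinning with weights $\mathcal{W}$. Fitting methods such as SMPLify estimate $\vec{\beta}$ and $\vec{\theta}$ from a single image, which corresponds to the "estimation of the user avatar" task in the topic list.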

Tools

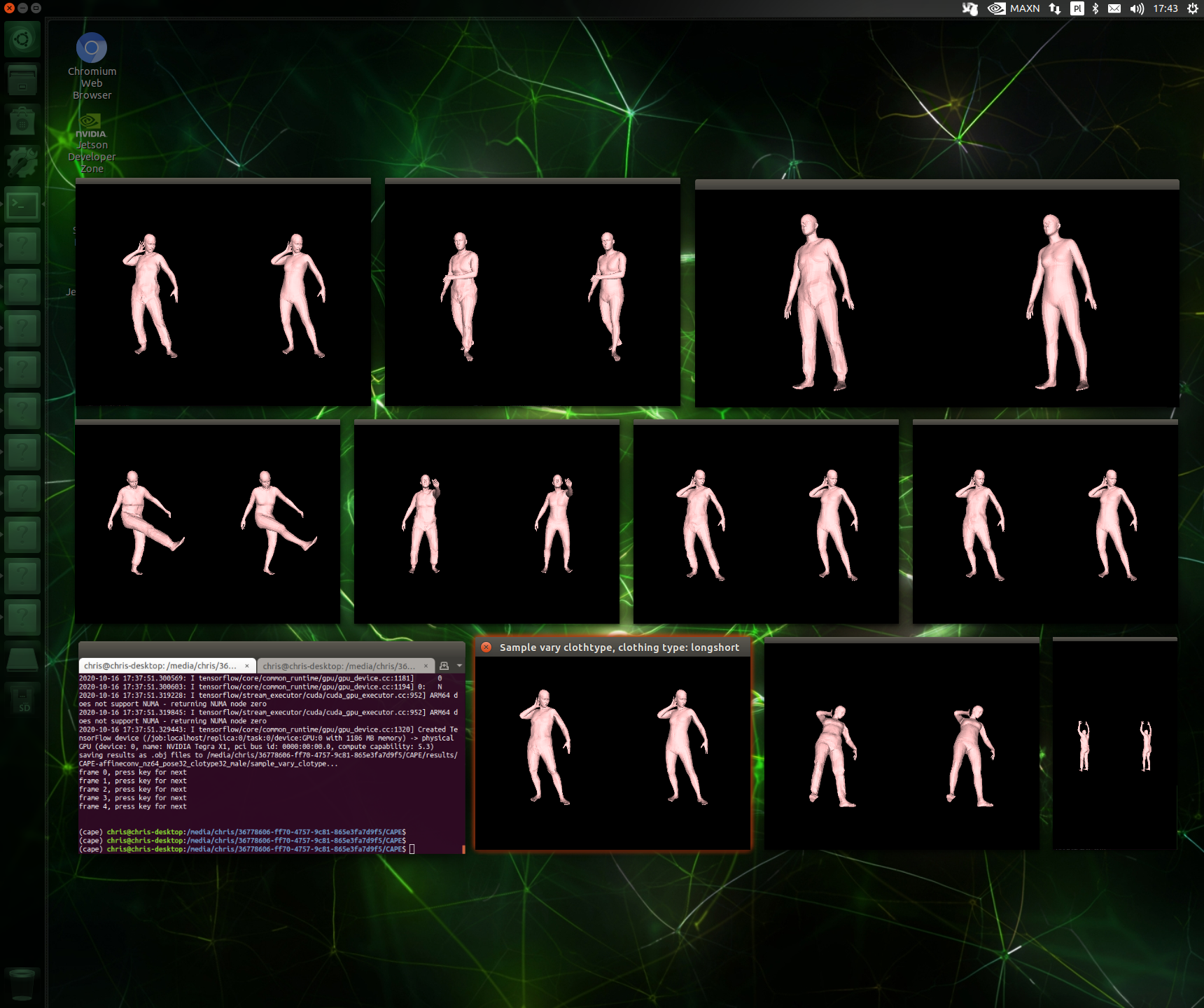

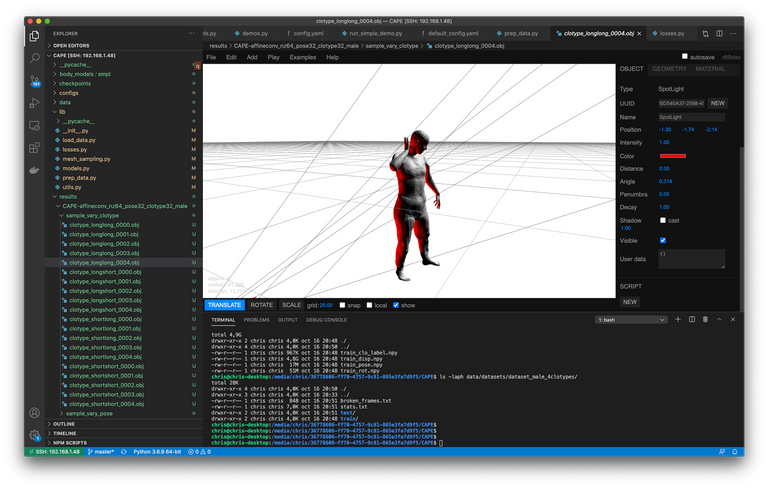

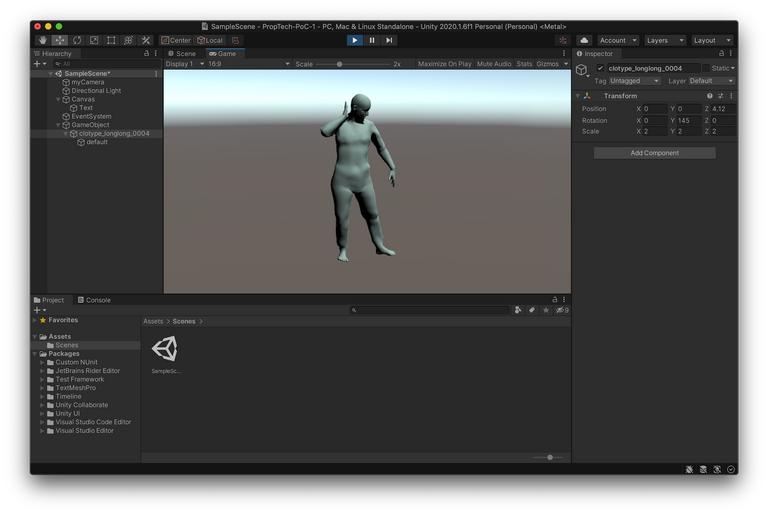

I succeeded in downloading all the resources, setting up the environment, and running the CAPE pipelines. However, none of these tasks is trivial, and they require a lot of computing power. I started my setup on a Jetson TX1 but quickly moved to a Jetson AGX Xavier (32 TOPS INT8, 512-core Volta GPU, 64 Tensor Cores, 32 GB RAM).

All screenshots, photos, and videos in this blog post were taken in my home-lab environment.
